Will Artificial Intelligence Replace Designers?

It works a little something like this: Think of your dream room—any style, anywhere. Can you describe it in a sentence? Good. Type that into the text box. Hit enter and watch as your vision materializes on the screen, steadily gaining clarity as if you were twisting a camera lens. First, you perceive white forms of softly curved furniture and the arched shapes of windows. You then gradually spot blossoms on a branch, intricate moldings, soft bouclé textures. This room you’re seeing may not technically exist, but somehow it’s more real than anything you could have ever imagined.

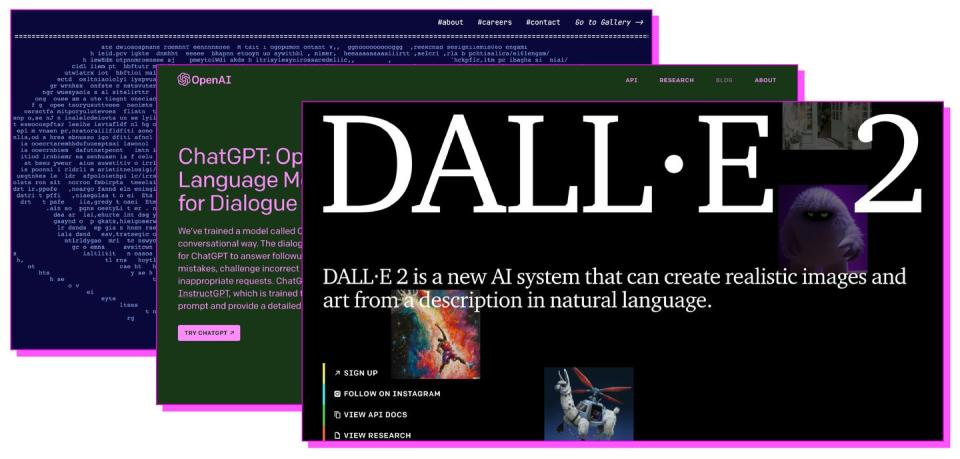

Welcome to the uncanny world of generative AI, the rapidly emerging technology that has confounded critics, put lawyers on speed dial, and awed (and freaked out) pretty much everyone else. Via complex machine-learning algorithms, new platforms with names befitting a sci-fi novel (DALL-E, Stable Diffusion, Midjourney) have the ability to translate simple text commands into incredibly vivid, hyperdetailed renderings. The promise? If you can imagine it, you can materialize it.

You’ve likely already heard of generative AI in the news, probably concerning ChatGPT, a bot developed by the company OpenAI that has the preternatural ability to spit out entire essays from simple commands (“An article on generative AI and design,” perhaps?) with jarringly human precision. Just as educators, journalists, and academics are grappling with the implications of this powerful, slightly spooky technology, the design community—which relies on renderings and drawings to produce and communicate ideas—is trying to make sense of how AI-generated images will impact not only the practice of design but also how we talk about it. Suddenly anyone with an Internet connection is a designer, and entire rooms, buildings, cities, and ecosystems can be generated with the ease of texting your best friend—with startling clarity and speed to boot.

***

There’s nothing inherently new about AI for the masses (Spotify uses AI to serve you a new earworm; this journalist uses a machine learning–assisted tool to transcribe her interviews), but the rate at which these largely open-source technologies have advanced has shocked nearly everyone—experts included. DALL-E, another OpenAI product, launched one year ago, while ChatGPT debuted just two months ago. Advances in text-to-video and text-to-3D-images could be on the scene in a matter of months, if not weeks; this technological leapfrogging has tossed Moore’s Law—the observation that the number of transistors on a chip, and with it computing power, doubles roughly every two years—entirely out the window.

“People have been creating images with AI for 15 years,” says architect Andrew Kudless, principal of the Houston-based studio Matsys Design. “But [back then], all it could produce were super-psychedelic images of, like, dogs’ faces made of other dogs’ faces or the Mona Lisa made out of cats. What’s happened in the past year is that the technology has gotten much, much better. And it’s also become much more accessible.” How accessible? In a matter of minutes, this journalist managed to sign up for a Midjourney account (one of the most popular text-to-image platforms) and began rendering a fantastic Parisian living room worthy of an ELLE DECOR A-List designer.

“Has it taken architecture by storm? Yes,” says Arthur Mamou-Mani, an architect based in London with a practice that specializes in digital design and fabrication (among his studio’s designs was a spiraling timber temple that was set ablaze during the 2018 edition of Burning Man). “Usually when you're an architect, you have an idea, you sketch, you go on [the CAD software] Rhino, you start modeling it, you tweak it, then you have to render it,” he explains. “[With generative AI], you have an idea, you start typing some words, and boom, you get the final renderings. The immediacy of the results versus the idea has never been that quick, which means you can iterate extremely fast.”

He shares his screen to demonstrate Midjourney. “Imagine a city of the future, New York, with wood and vegetation everywhere, rising seawater, like Venice,” he ad-libs, typing rapidly into the chat thread. Forty-five seconds later, a futuristic version of Manhattan’s Battery appears, with torquing towers, flying cars, verdant canals, and floating gondolas. Admittedly, it’s a little funky (think Zaha Hadid meets SimCity), but that’s the point. “It’s a more involved mood board,” Mamou-Mani explains; he typically works to edit and refine the ideas presented to him by the bot. “You spend less time on the digital screen because you’re getting answers faster”—and, by extension, more time realizing ideas in the physical world.

Architect Michel Rojkind agrees. “We need to get into these technologies—there is no way out,” he says via Zoom from his light-filled Mexico City office. “We need to understand them, at least, to figure out where everybody else is going and what's going to happen.”

Like Mamou-Mani, Rojkind sees AI-generated images as a means to reach design solutions more rapidly and then augment and test them using existing tools. “It’s like ‘exquisite corpse,’” he adds, referring to the old Surrealist parlor game. “Rather than copying, it’s translating.”

At present, Rojkind and his studio are exploring text-to-image platforms like DALL-E and Midjourney for the design of an eye clinic and the graphic identity for a brand of chocolate. “Don’t get me wrong. I mean, I’m still doing this,” he says emphatically, holding up a sketchbook. “It’s not polarizing, like one or the other. It’s not black or white. It’s like, ‘Guys, there’s all this possibility; there’s this amazing range of things that we can do now.’ That to me is what’s interesting—that cross-pollination of information.”

***

But when it comes to using these new tools, you have to know what you’re doing—and temper your expectations. “If you go into it knowing exactly what you want, you’re going to be disappointed,” says Kudless, who estimates that he has created some 30,000 AI-generated images. “It’s like talking to someone. The reason you talk to someone isn’t because you know exactly what they’re going to say. You want to have a conversation; otherwise it’s completely boring.”

“Say I want an interior that is furry and has a lot of mirrors,” posits Jose Luis García del Castillo y López, a professor at Harvard’s Graduate School of Design and a self-described “recovering architect.” “The thing would generate an image, and it’s going to be glitchy and you’re not going to use it right away. But by doing it over and over again and learning what words trigger changes in the images you get, all of these images become suggestive. All of these images are very inspirational.”

García del Castillo y López, who pivoted from an architecture career to focus on computational design, sees AI-generated images no differently than Pinterest boards or Taschen coffee-table books. “We’re going to act as curators of the information that we will generate ourselves synthetically,” he insists. “The value here is not anymore about the creation; it’s about the curation.”

Already, AI cottage industries are popping up around this idea. The website Promptbase, for instance, sells text commands to reach your desired aesthetic faster. For $1.99, you can purchase a file to help you generate “Cute Anime Creatures in Love,” or for $2.99, slick interior design styles.

***

As much as designers are seeing a world of potential for text-to-image technology, there is plenty of debate, pearl-clutching, and straight-up ambivalence toward it. The New York–based architect Michael K. Chen falls into the last category. “To the degree that people use tools like Instagram to find image-based inspiration, tools like AI are interesting and useful,” he says. “But I think that like anything else, it’s garbage in, garbage out.”

His feelings come down to how he sees his practice, one that is centered on attributes that AI alone cannot delineate, like context and social values. “There is a promising future out there. Right now, it’s already taking the place of a lot of technical or production-oriented tasks,” he acknowledges. “The manner in which it starts to supersede or take over our creative tasks is super-interesting and terrifying. But I’m also not particularly worried.”

Chen’s thoughts raise another question that has been plaguing creatives and aesthetic theorists since the dawn of the photograph: authorship and the meaning of art itself. Despite the singularity of each image that DALL-E or Midjourney spits out, every text-to-image platform needs to be “trained”—a process that entails scraping the alt-text and keywords of billions of images across the Internet. Earlier this month, Getty Images announced plans to sue Stability AI, the company that operates the text-to-image platform Stable Diffusion, for copyright infringement. (The handy website Have I Been Trained? allows you to compare your own images to those generated from popular AI models; images from ELLE DECOR, this writer found, can have upwards of 90 percent similarity with AI-generated ones.)

“When the conversation is framed around ‘AI is generating art,’ that’s when I think the conversation is misleading,” insists García del Castillo y López. “These models—DALL-E, Midjourney, whatever—they don’t generate art; they generate images. It’s very different. Everything is just statistics. It’s scary-good statistics, but it is statistics.”

Kudless, who is also a professor at the University of Houston’s Hines College of Architecture and Design, has emerged as an accidental architectural spirit guide for the AI-obsessed, delving into these problems and questions on his Instagram account, which has more than 100,000 followers. With his students, for example, he found that out of all the Pritzker Prize–winning architects referenced in AI-generated images, the work of Tadao Ando and Zaha Hadid trounced that of virtually every other architect.

Some of his other findings, however, have been more pernicious. In one experiment, he tried entering strings of random letters—essentially keyboard mashing—into Midjourney. The randomized prompts universally generated images of ethereal, wide-eyed (and largely white) young women, many of which, disturbingly, featured some kind of facial injury or bruising. Both experiments raise questions about the types of images and value systems with which these seemingly innocuous systems are “trained.” (“Assets are generated by an artificial intelligence system based on user queries,” Midjourney states on its website. “This is new technology and it does not always work as expected.”)

There’s also concern over the environmental impact of generative AI tools, which require vast amounts of computing power, and therefore energy, to continue churning out responses, as Vera van de Seyp, a student and researcher at MIT’s Media Lab, points out: “I think our group feels a little bit ambivalent about this change, exactly because of these kinds of questions. Is it ethical to have that amount of energy consumption or stealing work from artists?”

“It has a really big potential of being life-changing, and that’s what I want to focus on,” she continues. “But it’s still important to realize the cost of the tool you’re using—and if it’s worth it.”

***

For many architects and designers, it might be too soon to tell. Architect David Benjamin, principal of the forward-thinking firm The Living, is delving headfirst into these questions with his students at Columbia University’s Graduate School of Architecture, Planning and Preservation. In fact, he’s devoted a portion of his 2023 schedule to AI in a course called “Climate Change, Artificial Intelligence, and New Ways of Living.” “I’m not necessarily a total proponent, but I’m fascinated,” he says. “If we don’t get in there and apply our own critical thinking to these tools, and if we don’t develop hypotheses about productive ways to use them—or warnings about how not to use them—then others will do it without us.”

Benjamin spoke to ELLE DECOR on the first day of the new semester. Before the course began, he gave his students a little homework assignment: to present a series of AI-generated images. “If you just walked casually by our session from today, you could be forgiven for mistaking it for the final review,” he says. Benjamin believes that a technology as powerful and as fast as generative AI might hold the key to unlocking solutions for equally powerful and fast problems, like the climate emergency, our housing crisis, or social justice. “Incremental approaches won’t get us there quickly enough; gradual improvements in efficiency won’t get us there,” he insists. “Some people have said, ‘We can’t efficiency our way out of this problem.’ So that’s where maybe the wild stuff has a role to play.”

There’s still a long way to go before AI will be able to generate fully realized buildings with the push of a button, the experts agree. “AI is not there yet. And it’s not going to be there anytime soon,” García del Castillo y López says. “Architecture, design, and interior design are very, very open-ended problems.”

But for many, the value is already apparent. “I was very nostalgic at some point as an architect for firms like Archigram and people like Buckminster Fuller,” Rojkind says. This moment, he reflects, feels like a return to that bolder, freer time—one that offers “the possibilities of just dreaming out loud.”
