Why Brands Need to Be Aware of YouTube’s New AI Disclosure Rules

Given that machine-made content is becoming startlingly good, fighting the good fight against misinformation (read: sketchy deepfakes and visual AI trickery) should never go out of style. For YouTube, that means requiring account holders to fess up when they upload artificial intelligence-generated content. At least in some cases.

The goal, according to YouTube’s announcement on Monday, is to boost transparency and thwart viewer confusion in an era when the growing ubiquity of consumer AI tools makes it easier than ever to create and disseminate misleading content.

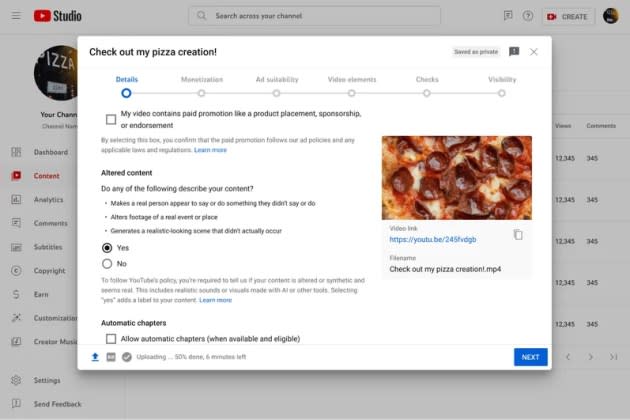

The new rules govern realistic-looking “altered or synthetic content,” so they don’t apply to videos that are obviously phony or fantastical. According to YouTube’s announcement, the policy kicks in when AI fakery involving real people, places or scenarios can pass for the real thing. Think deepfake videos or audio, a real-world building lit up by a phony but believable virtual fire, or a digitally created tornado heading toward an actual town. If any of those apply, creators must fill in the checklist when they upload to Creator Studio, so the system can put a disclosure label on the video.

This doesn’t apply to ads. In marketing-speak, the change targets “organic content” only — which could have brands and advertisers breathing a sigh of relief.

Not so fast.

One of the rules features an interesting word choice. Disclosure is required for “using the likeness of a realistic person,” not a “real” person. That looks like a bit of a gray area, so WWD contacted YouTube for more clarity on these new guidelines.

YouTube spokesperson Elena Hernandez pointed to company language referring to organic content that is “meaningfully altered or synthetically generated when it seems realistic. That would include a realistic person or a real person appearing to say or do something they didn’t do….[But] creators don’t need to disclose when they used generative AI for inconsequential changes like beauty or lighting filters.”

Fashion brands, however, could accidentally violate the rules. Plenty of apparel companies use AI fashion models and other altered content — like digital clothing placed on human models, to show what the physical version would look like on different body shapes and sizes.

Run them as YouTube ads, and there’s no problem, Hernandez confirmed.

But plenty of fashion brands create ads and promo content for other social channels, video platforms or TV commercials, then either upload them to their own YouTube accounts to grow an organic audience or ask creators to amplify them, hoping to see the videos go viral and create buzz.

According to Hernandez, those are no-nos. In both cases, “[it] would require the creator to disclose the use of altered or synthetic media per our policies,” she told WWD. “They would have to make the AI disclosure.”

For now, there’s no specific penalty for breaking the rules, even for repeat offenders. The company will share more about enforcement later, it said, but viewers should start noticing the labels on their phones, TVs and desktop browsers in a matter of weeks. YouTube added one more thing: When it comes to account holders that insist on ignoring the new AI rules, it might just go ahead and label offending videos anyway.

Brands and creators both should pay attention, if they don’t want to be called out for misleading the public on online video’s top purveyor. According to Nielsen, YouTube holds the top spot for TV streaming and, as a social media platform with 2.49 billion active users, it is second only to Facebook, with 3.05 billion active users.
